I’ve been following this technology application for a while. It is super cool but still pretty nascent. The input image really has to be super clean to get decent results.

You can do this locally on your computer by installing SDXL along with the Hugging Face depth map ControlNet. Unfortunately, you need a relatively strong NVIDIA GPU to get it to work.

I am still learning all the jargon used in AI image generation. The image databases, filters, and detailers all have their own names: ControlNets, LoRAs, diffusers, etc.

I am amazed by the speed of this development. It feels like it is improving at light speed.

For anyone curious, this is the level of detail you are getting out of the box. There are filters you can use with the AI software to slightly improve it as well as cleaning up the image with a 2d graphics software (removing background and other processes). You can also always clean up the 3d geometry with 3d modeling software. It can give you a really good starting point for a pattern.

I am still amazed you can get anything from a single 2d image to create a 3d bas relief.

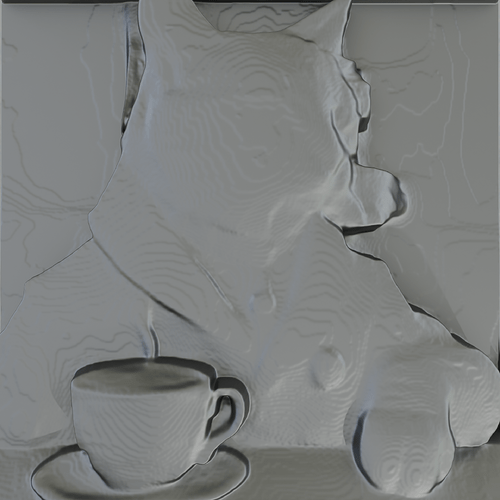

Image created in stable diffusion XL 1.0. Text prompt: cat in a coffee shop wearing a smoking jacket.

DAANNGG

This works so good!! I’ve been looking for a way to do this for ages. Thank you for sharing!!

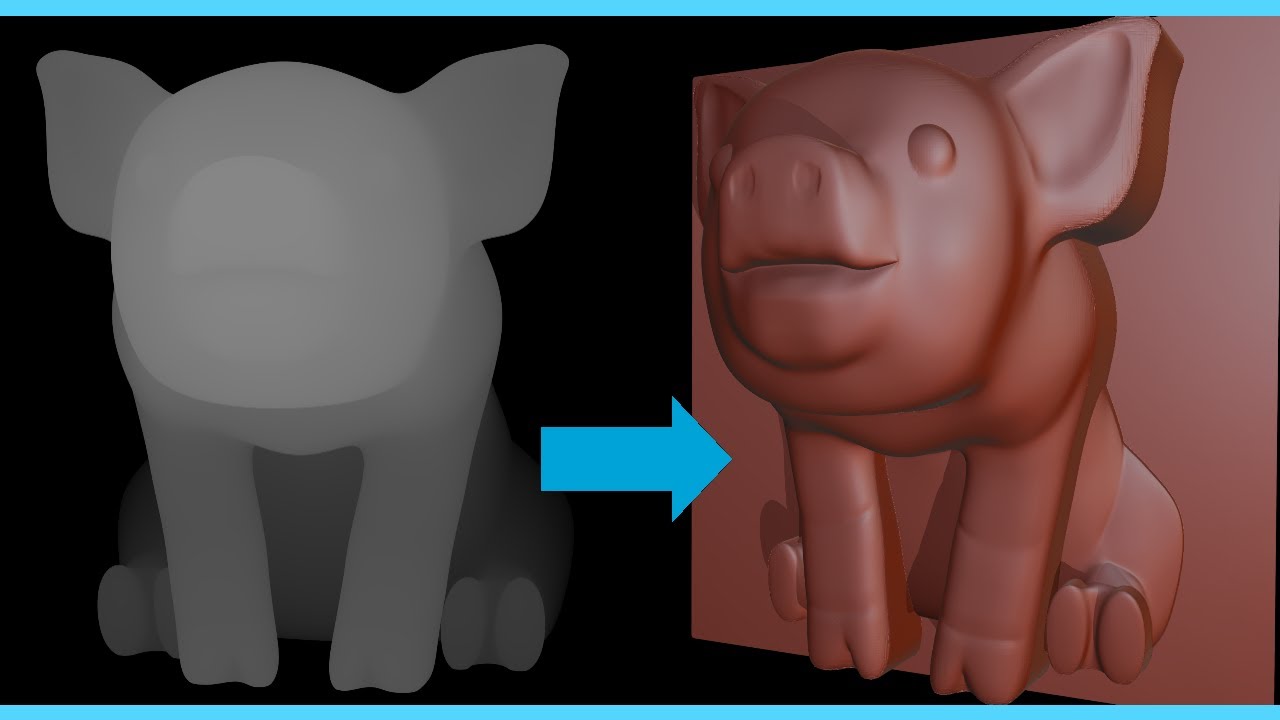

Here are my first results:

The midjourney prompt was

alabaster bas relief wall Frieze medallion of a deer fawn sitting in flowers cottagecore

I got the height map as described in the video.

Turned it into an STL using: Create a 3D Heightmap - ImageToStl

And took the screenshot in: SculptGL - A WebGL sculpting app

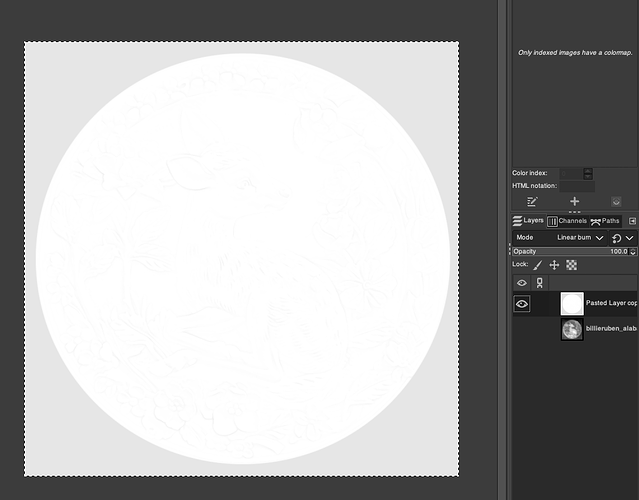
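For anyone curious what a height-map-to-STL conversion involves, here is a minimal sketch. It is not what ImageToStl actually runs (that implementation isn't described here); `heightmap_to_stl`, `xy_scale`, and `z_scale` are hypothetical names, and the sketch assumes the grey values have already been loaded into a plain list of rows. It only builds the top surface, so a real carving or printing model would still need a base and side walls.

```python
def heightmap_to_stl(heights, xy_scale=1.0, z_scale=1.0, name="relief"):
    """Return an ASCII STL string for the top surface of a height map.

    heights: list of rows, each a list of numbers (e.g. grey values 0-255).
    Assumption: brighter pixel = higher surface. No base or walls are added.
    """
    rows, cols = len(heights), len(heights[0])

    def vertex(r, c):
        # Flip rows so the top of the image ends up at the largest Y.
        return (c * xy_scale, (rows - 1 - r) * xy_scale, heights[r][c] * z_scale)

    def normal(a, b, c):
        ux, uy, uz = (b[i] - a[i] for i in range(3))
        vx, vy, vz = (c[i] - a[i] for i in range(3))
        nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
        length = (nx * nx + ny * ny + nz * nz) ** 0.5 or 1.0
        return nx / length, ny / length, nz / length

    lines = [f"solid {name}"]
    for r in range(rows - 1):
        for c in range(cols - 1):
            tl, tr = vertex(r, c), vertex(r, c + 1)
            bl, br = vertex(r + 1, c), vertex(r + 1, c + 1)
            # Two counter-clockwise triangles per pixel cell (normals face +Z).
            for tri in ((bl, br, tr), (bl, tr, tl)):
                n = normal(*tri)
                lines.append(f"  facet normal {n[0]:.6g} {n[1]:.6g} {n[2]:.6g}")
                lines.append("    outer loop")
                for v in tri:
                    lines.append(f"      vertex {v[0]:.6g} {v[1]:.6g} {v[2]:.6g}")
                lines.append("    endloop")
                lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    tiny = [[0, 10, 0], [10, 40, 10], [0, 10, 0]]
    print(heightmap_to_stl(tiny, xy_scale=1.0, z_scale=0.05))
```

An image with R rows and C columns gives 2 × (R − 1) × (C − 1) triangles, which is why large height maps turn into very dense meshes that benefit from decimation in a 3d package afterwards.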

I worked out how to add some of the original detail back in with an image editor: I ran a high pass filter on the original image, added the result as a linear burn layer over the height map, and fiddled with the levels a bunch until it looked like that white one.

Oh, after the high pass I also ran a median blur set to 1px a few times, to smooth it out a bit.
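The recipe above can be sketched in code, which may help if you want to see what each filter is doing. This is a rough approximation under stated assumptions, not what any particular image editor does internally: the high pass uses a simple box blur instead of a Gaussian, the "levels" step is just an automatic stretch rather than hand tuning, and all function names (`high_pass`, `median_blur`, `linear_burn`, `add_detail`) are hypothetical. Images are plain lists of rows with 0-255 grey values, so it runs without extra libraries.

```python
from statistics import median


def clamp(v):
    return max(0, min(255, int(round(v))))


def neighbourhood(img, r, c, radius):
    """Values in the square window around (r, c), clipped at the image edges."""
    rows, cols = len(img), len(img[0])
    return [img[rr][cc]
            for rr in range(max(0, r - radius), min(rows, r + radius + 1))
            for cc in range(max(0, c - radius), min(cols, c + radius + 1))]


def filtered(img, radius, reducer):
    return [[reducer(neighbourhood(img, r, c, radius))
             for c in range(len(img[0]))] for r in range(len(img))]


def mean(values):
    return sum(values) / len(values)


def high_pass(img, radius):
    # Original minus blurred copy, re-centred on mid grey (128).
    blurred = filtered(img, radius, mean)
    return [[clamp(p - b + 128) for p, b in zip(prow, brow)]
            for prow, brow in zip(img, blurred)]


def median_blur(img, radius=1, passes=1):
    for _ in range(passes):
        img = filtered(img, radius, median)
    return img


def linear_burn(base, blend):
    # Linear burn: base + blend - 255, clipped at black.
    return [[clamp(b + d - 255) for b, d in zip(brow, drow)]
            for brow, drow in zip(base, blend)]


def levels(img, black, white):
    span = max(white - black, 1)
    return [[clamp((p - black) * 255 / span) for p in row] for row in img]


def add_detail(height_map, original, radius=2, passes=2):
    detail = median_blur(high_pass(original, radius), 1, passes)
    burned = linear_burn(height_map, detail)
    lo = min(min(row) for row in burned)
    hi = max(max(row) for row in burned)
    return levels(burned, lo, hi)  # auto-stretch stands in for manual levels
```

Because the high pass layer sits around mid grey, the linear burn darkens the whole height map by roughly half the range, which is probably why the levels needed so much fiddling afterwards.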

@Billie_Ruben Welcome! Glad to see you here!

Thank you for sharing your experience with us. We enjoy all users' feedback on how they achieve success.

Look forward to seeing more of your creativity!

This felt to me like a real demonstration of using AI as an artist using a tool. Thank you for sharing it! The hint of using the high pass filter to let detail through is great.


Wow, that is super cool. I work 99% in 3d so I am pretty weak with my 2d tools but your explanation was very helpful. Very easy to do once you know.

So a big thank you. Another tool in the toolbox.

Hopefully you don’t mind but I used your prompt in Stable Diffusion XL.

Raw output:

With image filtered in:

And after like 60 seconds of clean up in Blender3d, this is what the mesh looks like, ready to be exported to STL. It would still need a lot of clean up, but for something that took just a couple of minutes it is really crazy. Doing this manually would have taken me many hours.

Again a big thank you.

I use Stable Diffusion XL (local installation), Blender3d/Zbrush, and Corel Paintshop Pro/CorelDraw.

Just an FYI in case you haven’t looked at it: Blender3d is a free, open-source option for converting height maps to 3d geometry, with a lot of control over the process. The downside is that it has a steep learning curve if you want to use all of it. On the plus side, you only need 4 steps for a basic height map to geometry conversion and, best of all, you control the outcome.

I just realized this little exercise excited and scared me a little bit. lol

I’ve had an online cnc pattern store for about 10 years. Modeling from scratch, it takes me between 6 and 12 hours to make a decent pattern. Infrequently, I’ve been motivated to spend days on a pattern.

This model took about 20-25 minutes (and the deer is way better than the deer I have made in the past). Around 5 minutes to make the pattern and about 15 minutes to clean it up.

It truly removes a huge barrier for folks to jump into creating amazing stuff with little technical background.

I am excited to see what amazing art all the young and young at heart folks create in the next few years.

Here is a further cleaned up version for your carving fun.

And I did a render using a scratched-up, grungy material for your photoshopping fun.

I don’t know how real this is, but it’s crazy if it is. Text to 3d CAD models.

Thank you! I do a lot of arty/crafty things, and I do see AI as an awesome way to cut out the boring bits, or trial ideas. I am excited for it.

I know a lot of folk are scared by it, and their fears are valid, in the same way that painters’ fears were valid when photography came along, but a lot of the fear really comes from the economic systems we find ourselves within, not the tool itself, IMO.

I think there are more and less ethical ways to use AI, and I’ve done a lot of deep thinking about it, even done some public debates. I’m excited for it.

I’m really glad that was enough info! I was a bit worried that I was so excited that I didn’t write enough of my method down, but what you have done looks incredible!!!

I definitely don’t mind you using the prompt!

I haven’t used much SD, do you like it?

Like you, I see this as a great method to get a ‘starting point’ that still needs a bit of refining and detail.

I have used Blender a little bit! Even created some tutorials and videos on it, but I must admit I spend more time in Fusion 360 and SculptGL.

Do you know of any software that would let me edit the 2D heightmap using brushes and such, similar to Photoshop, and show me the result live, alongside it, in 3D? Is that something I could set up in Blender?

Thank you!! Glad to be here!

Hi.

I started using SD XL because I could install it locally on my computer and create as many images as I want for free. It does take an NVIDIA graphics card with at least 4 GB of memory (more is better), so for me it was a no-brainer. It is probably not as good as the paid AI image generators, but it is good enough for me.

Blender is a polygonal modeling software so a bit different than CAD. But looking at SculptGL, you would not have any problems sculpting in Blender. A lot more powerful than SculptGL but you may or may not need all the capabilities.

I learned Fusion 360 in school but my brain is just not wired that way anymore after using polygonal software for so long (I started learning Blender 3d modeling in 2013). I am older, so I went to a 3d modeling/animation/VFX school after I retired to round out my skill set a little better.

I am a Corel user and Paintshop Pro can edit 2d height maps but I don’t recommend it necessarily. It is cheap but its performance just doesn’t seem to be keeping up with Photoshop. I will still keep getting it but I am going to start looking at Krita more. I’ve played with it a little but it looks like it might actually have better performance than Paintshop.

Blender can do 2d and can use brushes, but it is primarily 3d software. Can it do it? Yes, but there is better software for 2d editing. One caveat: if you want to do 2d animation, it’s pretty decent.

For bas relief cnc patterns I use both 3d and 2d software to get to my final pattern. I sometimes go back and forth between the two. For higher density models I will go into Zbrush. A bit pricey for a hobby but it is the industry standard for digital sculpting.

This is one of the processes (my channel) I use to convert a greyscale image to a 3d mesh.

Looks like there is a race on right now over who can get the best depth map (bas relief) AI algorithm.

Microsoft, Google, Adobe, TikTok and who knows how many other companies are working on this capability. Of course, the aim is to package it as part of their image filter suites, which become a selling point for their hardware or services.

From the YouTube reviews it looks like TikTok (Depth Anything) has the current lead, but I could not figure out how to install it into my Stable Diffusion XL installation. I don’t know Python coding or Git, so it’s all on me.

They are all impressive. Technology is moving on. Custom 2.5d patterns are going to be getting easier to do pretty soon (already).

Marigold (monocular depth estimation): depth map with 2d clean up only, then the height map ported to 3d geometry and rendered in Blender:

I think my cnc pattern store is dead. 4 minutes to generate this pattern.

1 minute to generate the ai image.

1 minute to generate the height map from the image using an ai depth map generator.

1 minute of clean up in a 2d graphics program.

1 minute of basic clean up in a 3d modeling software and export as stl.
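One detail in the depth map step that can trip people up: depth estimators generally hand back relative floating-point values, and tools differ on whether near means bright or dark. Below is a minimal sketch of normalizing such values into a 16-bit greyscale height map, written out as a binary PGM so it needs no extra libraries. The function name `depth_to_pgm16` and the `invert` flag are hypothetical, and it assumes the depth values are already in a plain list of rows. 16 bits is used because 8 bits only gives 256 height levels, which can show up as visible steps on a shallow relief.

```python
import struct


def depth_to_pgm16(depth, invert=False):
    """Normalize a 2D list of float depth values to a 16-bit binary PGM.

    invert=True flips the result for tools that output near = dark,
    so that the nearest point always ends up as the highest (brightest).
    """
    rows, cols = len(depth), len(depth[0])
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat map
    levels = [round((v - lo) / span * 65535) for v in flat]
    if invert:
        levels = [65535 - v for v in levels]
    header = f"P5\n{cols} {rows}\n65535\n".encode("ascii")
    # PGM stores 16-bit samples most significant byte first.
    return header + struct.pack(f">{len(levels)}H", *levels)


if __name__ == "__main__":
    sample = [[0.2, 0.4], [0.9, 1.3]]
    with open("height_map.pgm", "wb") as f:
        f.write(depth_to_pgm16(sample))
```

Most 2d editors and Blender can open the resulting file, or it can be converted to PNG or TIFF for tools that prefer those formats.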

Post Script:

Forgot to post the height map file for anyone that wants to carve it or play with it. This version is the one after the 2d clean up. It can be further cleaned up using 3d modeling software prior to export to stl or can be used for greyscale laser engraving.